Hacking an Assistive Brain Computer Interface for Art

2013-2015

Modifying our EEG device (built with OpenBCI) to be installed into an interactive art display in London

The Brainwriter project is an open-source, collaborative effort to help a paralyzed graffiti artist paint again via a non-invasive, low cost brain-computer interface. The advanced stage of ALS makes eye tracking his primary form of communication, but artistic expression has become difficult because of the exhaustion caused by the necessary blinking. Our hypothesis has been that combining EEG and eyetracking technology can unlock an extra dimension of control for the artist and allow him to create his amazing art again.

This project has been in collaboration with OpenBCI and Not Impossible Labs. It is a continuation of the awesome original Eyewriter Project, designed and built by the Graffiti Research Lab (featuring creative coding leaders Zach Lieberman, Theo Watson and James Powderly) in 2006, also in collaboration with the Ebeling Group.

Overview (read: this is a long post)

This is a large, multi-part project, so this post is split into four parts: designing and building the EEG device itself, designing software for drawing, building a software platform for EEG-based experiments, and building an interactive installation using the system that is currently on an 18-month tour of the world. All necessary source code links are aggregated at the bottom of the page.

The EEG Device

The Brainwriter is an exploratory process of combining eyetracking and EEG signals to identify the equivalent of mouse clicks. Choosing the eyetracking hardware was fairly straightforward: the two main leaders in the space were Tobii and EyeTribe, and since we needed a robust API, we chose Tobii. But while the eyetracker is a small device that clips to a computer monitor, choosing a suitable EEG, which must attach firmly to a human, is a much more involved task.
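To make the idea of an EEG "click" concrete, here is a minimal Python sketch of one way the two signals could be fused: the eyetracker supplies *where*, and an EEG detector supplies *when*. The `GazeSample` type, the `fuse_click` function and the 40-pixel stability threshold are hypothetical choices for illustration; they are not the Tobii API or the Brainwriter's actual logic.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class GazeSample:
    """One gaze estimate in screen pixels (hypothetical type, not the Tobii SDK)."""
    x: float
    y: float


def fuse_click(
    gaze_window: List[GazeSample],
    eeg_says_click: bool,
    max_spread_px: float = 40.0,  # assumed stability threshold, in pixels
) -> Optional[Tuple[float, float]]:
    """Emit a click at the gaze centroid only if the EEG detector fired
    and the gaze was steady over the recent window (hypothetical fusion rule)."""
    if not eeg_says_click or not gaze_window:
        return None
    cx = sum(g.x for g in gaze_window) / len(gaze_window)
    cy = sum(g.y for g in gaze_window) / len(gaze_window)
    # Largest distance of any sample from the centroid: a crude fixation test.
    spread = max(math.hypot(g.x - cx, g.y - cy) for g in gaze_window)
    if spread > max_spread_px:
        return None  # the eyes were moving, so the position is not trustworthy
    return (cx, cy)


steady = [GazeSample(100, 200), GazeSample(104, 198), GazeSample(102, 202)]
print(fuse_click(steady, eeg_says_click=True))  # (102.0, 200.0)
```

Requiring a steady gaze before accepting the EEG trigger is one simple way to keep eyetracker jitter from turning a correct "click" into a misplaced one.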

The EEG is a wearable device that needs to contact the skin in order to read the electrical potential of the brain's activity through the skull. Many parameters need to be considered both for the wearer and the experiment. For the person, is it comfortable, obstructive, sweat-collecting? For the sake of accessing meaningful data, are we collecting enough sensor data from the right places?

In the case of somebody who is paralyzed in a bed, the user concerns are even more acute. Anything rigid at the back of the head quickly becomes painfully uncomfortable, so experimentation ruled out the Emotiv Epoc. Because the forehead is such an important part of the artist's communication, putting a thick band across it would muffle him; that ruled out the BrainBand, which was also limited in its number of sensors.

To discover the right data to access for this project, the hardware would need customizability with the placement and number of electrodes. After exhausting all commercially available options, the team concluded that we had to build our own EEG.

At its core, an EEG device is a measurement system that captures analog signals, converts them to digital data on a chip, then relays that data to a computer. Building all three parts independently is a significant amount of work, and the problem has been solved many times before, so we looked for pre-designed components that we could combine. Although there are some notable legacy projects such as OpenEEG, the team identified two main modern options: building with the NeuroSky ThinkGear sensor modules (which would still require us to build a separate microprocessor-based unit to relay the information back to a computer), or trying out the brand new OpenBCI EEG system. Because the spirit of this project is open source, we contacted the OpenBCI team and started a collaboration. [trivia: our relationship started over Twitter]

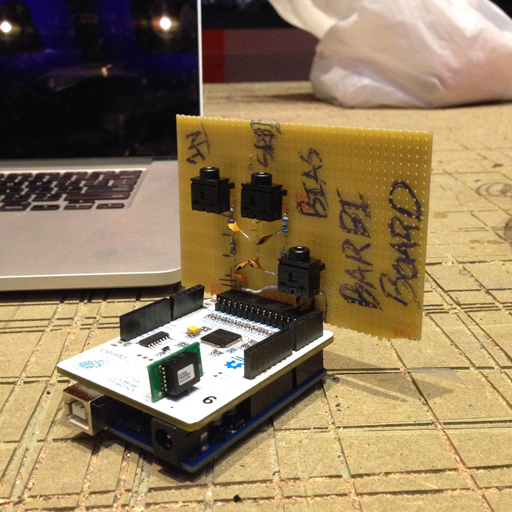

The OpenBCI V1 board: handling analog sensors in and streaming digital information back to a computer

OpenBCI is an open-source, open-hardware, crowd-funded initiative to empower anybody to access their brainwaves. After a successful Kickstarter campaign in 2013, they have been shipping an Arduino-based system that covers both parts of the data acquisition challenge: converting analog sensor data into digital signals and providing the interface through which computer software accesses the data. However, while the OpenBCI kit does ship with a set of loose electrodes, designing a chronically wearable sensor kit for a hospital room would be a different challenge.
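As an illustration of the "analog to digital" half, OpenBCI's boards digitize each channel with TI's ADS1299, which produces 24-bit signed samples. The sketch below decodes one sample and scales it to microvolts. It assumes the big-endian, two's-complement packing and the scale factor documented for OpenBCI's later Cyton board (4.5 V reference, programmable gain of 24, so `vref / gain / (2**23 - 1)` volts per count); the V1 board's exact settings may differ, and the helper names are illustrative.

```python
def int24_to_counts(raw: bytes) -> int:
    """Decode one big-endian, two's-complement 24-bit sample (assumed packing)."""
    if len(raw) != 3:
        raise ValueError("expected exactly 3 bytes")
    return int.from_bytes(raw, byteorder="big", signed=True)


def counts_to_microvolts(counts: int, gain: int = 24, vref: float = 4.5) -> float:
    """Scale raw ADC counts to microvolts (assumed Cyton-style scale factor)."""
    volts_per_count = vref / gain / (2**23 - 1)
    return counts * volts_per_count * 1e6


sample = int24_to_counts(bytes([0x00, 0x01, 0x00]))  # 256 counts
print(sample, round(counts_to_microvolts(sample), 3))  # 256 5.722
```

Under these assumptions one count is about 0.022 microvolts, which is why a 10-100 microvolt EEG signal still spans thousands of counts.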

EEG signals are notoriously difficult to capture. The signal is in the 10-100 microvolt range, and the conductor used to read it from the scalp also acts as an antenna for myriad sources of electromagnetic interference (EMI). Even the slightest physical disturbance, such as a bumped cable, can introduce spurious noise. Since we were designing an electrode system for a person lying next to a respirator, we needed a way to minimize unshielded cable length while maximizing skin contact. From our research, the best choice of electrode was the Olimex active electrode.

A slight complication of using the Olimex sensors was that we had to design an extra printed circuit board to interface between the OpenBCI board and the Olimex sensors. The design is below.

Furthermore, later in this project (see The Digital Futures Exhibit) we learned that significant analog filtering would be necessary. When trying to make this system work in the Barbican Centre in London, we found EMI orders of magnitude stronger than anything we had experienced in our New York lab, and at 50Hz rather than 60Hz (our notch filters were set to 60Hz, the US mains frequency). In the field, we hacked together a quick n' dirty analog filter system using nested RC filters, but future versions of the Olimex+OpenBCI daughterboard will include more appropriate filters.
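The 50Hz/60Hz mismatch is easy to see numerically. Below is a minimal Python sketch of a digital biquad notch (coefficients from the RBJ Audio EQ Cookbook), showing that a notch tuned to 60Hz barely touches 50Hz hum. This is a digital illustration, not the analog RC fix we built in the field; the 250Hz sample rate and Q of 30 are assumptions.

```python
import cmath
import math


def notch_coefficients(f0: float, fs: float, q: float = 30.0):
    """Biquad notch from the RBJ Audio EQ Cookbook, normalized so a[0] == 1."""
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)
    a0 = 1 + alpha
    b = [1 / a0, -2 * cos_w0 / a0, 1 / a0]
    a = [1.0, -2 * cos_w0 / a0, (1 - alpha) / a0]
    return b, a


def gain_at(b, a, f: float, fs: float) -> float:
    """Magnitude of the filter's frequency response at f Hz."""
    z_inv = cmath.exp(-2j * math.pi * f / fs)
    num = b[0] + b[1] * z_inv + b[2] * z_inv**2
    den = a[0] + a[1] * z_inv + a[2] * z_inv**2
    return abs(num / den)


def apply_biquad(b, a, samples):
    """Run the filter over a sequence of samples (Direct Form I)."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


FS = 250.0  # assumed sample rate in Hz
b60, a60 = notch_coefficients(60.0, FS)
b50, a50 = notch_coefficients(50.0, FS)
print(f"60Hz notch, gain at 50Hz: {gain_at(b60, a60, 50.0, FS):.3f}")  # 0.998
print(f"50Hz notch, gain at 50Hz: {gain_at(b50, a50, 50.0, FS):.3f}")  # 0.000
```

A notch this narrow leaves the EEG bands of interest essentially untouched, but it only helps if it is tuned to the local mains frequency.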

Finally, we have postponed designing the physical housing for the EEG until our experiments tell us exactly where the sensors need to go. In the meantime, we've been getting good mileage out of a thin Nike headband, though when we put it on the artist, he quipped, "this makes me look like a rapper."

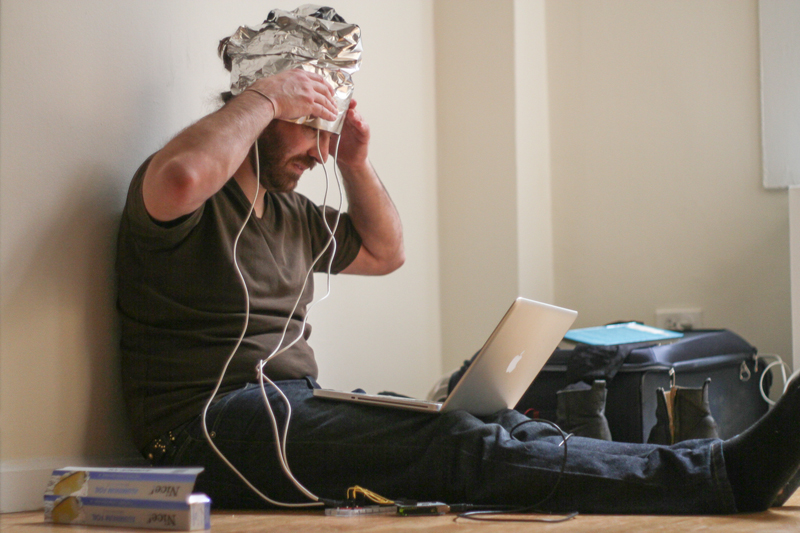

Even wild ideas were considered for the noise reduction problem when designing a wearable that measures tiny signals.

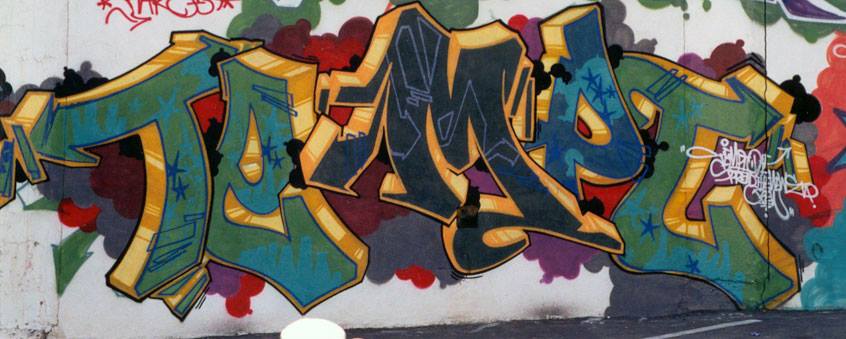

The Drawing Software

The software we create needs to let Tempt's master hand flow again. The picture above is one of his incredible pieces from Los Angeles in the 1990s.

"My goal is to get your autograph" is what I told Tempt after I first met him. To facilitate that, we need to build drawing software that is designed to work with eyetracking hardware.

The software needs several key features:

Point-by-point sketching with easy deletion: Even the best eyetracking hardware has inaccuracies and glitches, and Tempt is a perfectionist. Freehand drawing is unfortunately not an option with existing hardware, so we must build the software around a line-by-line system.

The ability to bend lines: Straight lines alone cannot recreate the depth of Tempt's work.

The ability to save and share work: This is more than an infrastructure issue; it is about making work he can be proud of, which he and others can revisit.

Drawing virtually on a real wall: Part of Tempt's work reacts to its environment, much like jazz, he says. So giving him a pure white background to start from misses a piece of the process. Taking images of real walls and including them in the software package might help inspire his work.

Bringing the messiness of the real world into the digital world: In the original Eyewriter work, the GRL team (including Zach and James) learned that one of Tempt's favorite features was the "drip," a digital re-creation of the occasional paint drip that runs in real work.
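The first two requirements (point-by-point drawing with easy deletion, and bendable lines) suggest a simple data model. Here is a minimal Python sketch, assuming each gaze "click" adds a vertex, undo removes the most recent one, and a bent segment is a quadratic Bézier curve through a control point. The `Drawing` and `Segment` classes are hypothetical; the real software is written in OpenFrameworks/C++.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[float, float]


@dataclass
class Segment:
    end: Point
    control: Optional[Point] = None  # if set, the segment is bent (quadratic Bezier)


@dataclass
class Drawing:
    """Hypothetical point-by-point drawing model."""
    start: Optional[Point] = None
    segments: List[Segment] = field(default_factory=list)

    def add_point(self, p: Point) -> None:
        """Each click adds one vertex; the first one starts the line."""
        if self.start is None:
            self.start = p
        else:
            self.segments.append(Segment(end=p))

    def undo(self) -> None:
        """Easy deletion: drop the most recent vertex."""
        if self.segments:
            self.segments.pop()
        else:
            self.start = None

    def bend_last(self, control: Point) -> None:
        """Bend the most recent segment toward a control point."""
        if self.segments:
            self.segments[-1].control = control

    def polyline(self, steps: int = 8) -> List[Point]:
        """Flatten the drawing into points a renderer could connect."""
        if self.start is None:
            return []
        pts = [self.start]
        prev = self.start
        for seg in self.segments:
            if seg.control is None:
                pts.append(seg.end)
            else:
                cx, cy = seg.control
                for i in range(1, steps + 1):
                    t = i / steps
                    u = 1 - t
                    pts.append((u * u * prev[0] + 2 * u * t * cx + t * t * seg.end[0],
                                u * u * prev[1] + 2 * u * t * cy + t * t * seg.end[1]))
            prev = seg.end
        return pts


d = Drawing()
d.add_point((0, 0))
d.add_point((10, 0))
d.bend_last((5, 10))
print(d.polyline(steps=2))  # [(0, 0), (5.0, 5.0), (10.0, 0.0)]
```

Keeping straight and bent segments in one list means a single undo works the same way regardless of how the last line was drawn.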

One of our explorations was to capture the blending and messiness of watercolors. Using the extraordinary open source work of the digital artist Kynd (Github link), we were able to do some early tests:

Kynd's code produces natural-looking watercolor flow, but for Tempt to use it, we need to adapt it for point-to-point drawing.

The platform of choice is OpenFrameworks, a C++ toolkit that beautifully wraps OpenGL.

The working prototype, incorporating point-to-point navigation to allow Tempt his maximal control, bending lines, different color fills, and images of real walls to allow for interaction.

We have been building many pieces of this but have not yet had the chance to properly test the software with him. To date it has all been developed in NYC, but we hope to see him in the first few months of 2015.

The Experimentation Platform

What does it take to use EEG data as a real-time control signal? This is the eventual goal of the project, and here we walk through some of our thinking.

First, the most naive approach: can we simply access alpha and beta waves in a controllable way? The answer is no. Below is a screenshot of a simple app we made (in OpenFrameworks) that shows a running history of alpha and beta waves. Across several people, we saw little to no success in intentionally modulating alpha (green) or beta (blue). We also built a simple app that has a subject do a series of mental math problems, but detected no reliable alpha/beta difference. Clearly we had to do something more sophisticated.

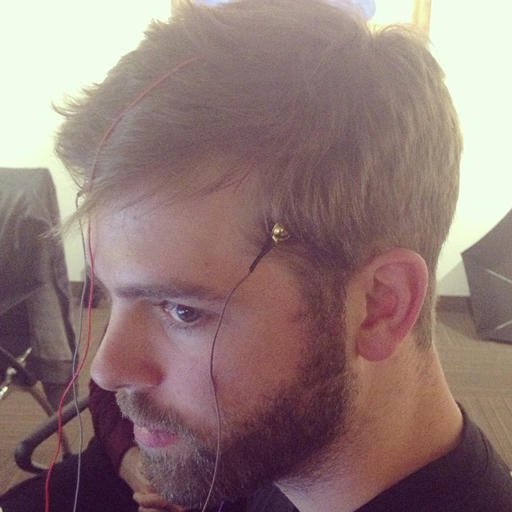
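For reference, a running alpha/beta display like the one above boils down to estimating band power over short windows. Below is a dependency-free Python sketch using a Hann-windowed DFT. The band edges (8-12 Hz alpha, 13-30 Hz beta), the 250 Hz sample rate and the one-second window are common conventions assumed here, not necessarily the settings our app used.

```python
import math
from typing import Sequence


def band_power(samples: Sequence[float], fs: float, lo: float, hi: float) -> float:
    """Sum of Hann-windowed DFT power in [lo, hi] Hz (naive O(n * bins) DFT)."""
    n = len(samples)
    mean = sum(samples) / n
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]
    x = [(s - mean) * w for s, w in zip(samples, window)]  # remove DC, taper edges
    total = 0.0
    for k in range(1, n // 2 + 1):
        if lo <= k * fs / n <= hi:  # only compute bins inside the band
            re = sum(x[i] * math.cos(2 * math.pi * k * i / n) for i in range(n))
            im = -sum(x[i] * math.sin(2 * math.pi * k * i / n) for i in range(n))
            total += re * re + im * im
    return total


FS = 250.0  # assumed sample rate in Hz
one_second = [math.sin(2 * math.pi * 10 * i / FS) for i in range(int(FS))]
alpha = band_power(one_second, FS, 8, 12)   # assumed alpha band
beta = band_power(one_second, FS, 13, 30)   # assumed beta band
print(alpha > beta)  # True: a 10 Hz rhythm lands in the alpha band
```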

An incremental step forward is to train a classifier to differentiate two states. Conor Russomanno (co-founder and President of OpenBCI) and I experimented with a motor visualization task. With two sensors on the left side of his head, one approximately over the motor cortex region that controls his arm, we recorded a 10-minute training set of Conor visualizing shaking his right arm at varying intervals. A simple Support Vector Machine (SVM) classifier yielded 78% accuracy on this data.

Conor Russomanno, CEO and Co-Founder of OpenBCI, collaborating with me as we worked on a motor-visualization-based EEG indicator
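For readers unfamiliar with SVMs, the sketch below trains a linear SVM with the Pegasos stochastic sub-gradient method on toy two-dimensional features (think of one band-power value per electrode). It is dependency-free for illustration; a library implementation such as scikit-learn's would normally be used instead. The features, data and hyperparameters are made up and do not reproduce the 78% result.

```python
import random
from typing import List, Sequence


def train_linear_svm(X: Sequence[Sequence[float]], y: Sequence[int],
                     lam: float = 0.01, epochs: int = 20, seed: int = 0) -> List[float]:
    """Pegasos: SGD on the L2-regularized hinge loss. Labels must be +1/-1.
    A constant 1.0 feature is appended so the last weight acts as the bias."""
    rng = random.Random(seed)
    data = [list(x) + [1.0] for x in X]
    w = [0.0] * len(data[0])
    t = 0
    order = list(range(len(data)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, data[i]))
            w = [(1 - eta * lam) * wj for wj in w]  # regularization shrink
            if margin < 1:  # hinge loss active: push toward the correct side
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, data[i])]
    return w


def predict(w: Sequence[float], x: Sequence[float]) -> int:
    score = sum(wj * xj for wj, xj in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1


# Toy data: +1 ("imagining arm movement") vs -1 ("rest"), two features each.
rng = random.Random(1)
X = [(rng.gauss(2, 0.5), rng.gauss(2, 0.5)) for _ in range(50)] + \
    [(rng.gauss(-2, 0.5), rng.gauss(-2, 0.5)) for _ in range(50)]
y = [1] * 50 + [-1] * 50
w = train_linear_svm(X, y)
accuracy = sum(predict(w, x) == label for x, label in zip(X, y)) / len(y)
print(f"training accuracy: {accuracy:.2f}")
```

Real EEG features overlap far more than this toy data, and accuracy should be measured on held-out trials rather than the training set.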

While this first classification attempt yielded promising results, it was far from complete. The 78% accuracy on independent time trials could certainly be boosted with some statistical knowledge about the experiment's constraints (i.e., the classifier runs once per second, but the system could exploit knowing that he imagines moving his arm for at least 3 seconds at a time). However, it is unclear whether this is the optimal approach.
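A back-of-the-envelope version of that idea: if each one-second decision were correct with probability p = 0.78 and errors were independent (a strong assumption, since EEG noise is correlated over time), a majority vote over three consecutive decisions is correct whenever at least two are, giving p^3 + 3p^2(1 - p) = 0.474552 + 0.401544 ≈ 0.876. The sketch below computes this and applies causal majority-vote smoothing to a stream of 0/1 decisions; the function names are illustrative.

```python
from math import comb
from typing import List, Sequence


def majority_vote_accuracy(p: float, n: int) -> float:
    """P(majority of n independent decisions is correct), for odd n."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))


def smooth_decisions(decisions: Sequence[int], n: int = 3) -> List[int]:
    """Replace each 0/1 decision with the majority of the last n decisions.
    Early windows are shorter than n; ties there resolve to 0."""
    out = []
    for i in range(len(decisions)):
        window = decisions[max(0, i - n + 1): i + 1]
        out.append(1 if 2 * sum(window) > len(window) else 0)
    return out


print(round(majority_vote_accuracy(0.78, 3), 6))  # 0.876096
print(smooth_decisions([0, 1, 1, 0, 1, 1, 1, 0, 0, 0]))  # [0, 0, 1, 1, 1, 1, 1, 1, 0, 0]
```

The trade-off is latency: a three-second vote cannot report a state change until the majority of its window has changed.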

Traditionally, most EEG-based control signals have relied on some sort of event-related potential. For example, a light blinking in front of a subject at a specific frequency will elicit an EEG signal at that same frequency, measurable over the occipital lobe at the back of the head. So if a subject is presented with multiple lights blinking at different frequencies, the one he chooses to attend to, even without moving his eyes, will create a detectable signal in the occipital lobe. For a full write-up on how to do this yourself, check out Chip Audette's <a href="http://eeghacker.blogspot.com/2014/06/controlling-hex-bug-with-my-brain-waves.html" target="_blank">awesome EEG Hacker blog and video of him controlling a hex robot</a>. But our project aims to help a master artist make visual art, so blinking patterns would be a distraction, and certainly not something he could use for long periods of time.
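The core of this kind of frequency-tagged detection (often called SSVEP) is comparing signal power at each stimulus frequency on an occipital channel. Here is a minimal Python sketch using the Goertzel algorithm, which computes the power of a single DFT bin cheaply. The stimulus frequencies, sample rate and two-second window are assumptions for illustration; practical systems typically also use harmonics or canonical correlation analysis.

```python
import math
import random
from typing import Sequence


def goertzel_power(samples: Sequence[float], fs: float, freq: float) -> float:
    """Power of the DFT bin nearest `freq`, via the Goertzel recurrence."""
    n = len(samples)
    k = round(n * freq / fs)  # nearest bin index
    coeff = 2 * math.cos(2 * math.pi * k / n)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev**2 + s_prev2**2 - coeff * s_prev * s_prev2


def attended_frequency(samples, fs, stimulus_freqs):
    """Pick the stimulus whose flicker frequency carries the most power."""
    return max(stimulus_freqs, key=lambda f: goertzel_power(samples, fs, f))


FS = 250.0  # assumed sample rate in Hz
rng = random.Random(0)
# Two seconds of a simulated occipital channel: a 12 Hz response plus noise.
eeg = [math.sin(2 * math.pi * 12 * i / FS) + rng.gauss(0, 1.0) for i in range(500)]
print(attended_frequency(eeg, FS, [8.0, 10.0, 12.0, 15.0]))  # 12.0
```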

One of the core members of the Brainwriter team is Dr. David Putrino, a professor at Cornell University's Burke Rehabilitation Hospital. He identified a technique developed by his colleague Dr. Jeremy Hill, which uses stereo audio in a manner analogous to the blinking lights described above. Dr. Hill's December 2012 paper, "Communication and control by listening: toward optimal design of a two-class auditory streaming brain-computer interface," showed promise that this might be the right approach. Furthermore, the same lab published a follow-up paper in 2014 showing that natural stimuli could work just as well as the artificial beeping patterns. We therefore think that exploring a classifier based on auditory ERPs to natural sounds could be a fruitful direction.
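The paradigm rests on a standard ERP trick: average many EEG epochs time-locked to each stream's sound onsets, so the random background cancels and the response to the attended stream stands out. The sketch below is a deliberately simplified illustration of that idea, not Dr. Hill's classifier (which is considerably more sophisticated); the 0.3-0.5 s scoring window, the sample rate and the synthetic data are assumptions.

```python
import random
from typing import List, Sequence


def epoch_average(eeg: Sequence[float], onsets: Sequence[int], fs: float,
                  t_start: float, t_end: float) -> List[float]:
    """Average the EEG segments from t_start to t_end seconds after each onset."""
    a, b = round(t_start * fs), round(t_end * fs)
    total = [0.0] * (b - a)
    count = 0
    for onset in onsets:
        lo, hi = onset + a, onset + b
        if lo < 0 or hi > len(eeg):
            continue  # skip epochs that run off either end of the recording
        for i in range(b - a):
            total[i] += eeg[lo + i]
        count += 1
    if count == 0:
        raise ValueError("no complete epochs")
    return [v / count for v in total]


def attended_stream(eeg, left_onsets, right_onsets, fs, window=(0.3, 0.5)):
    """Guess the attended stream: larger mean averaged response in the window."""
    left = epoch_average(eeg, left_onsets, fs, *window)
    right = epoch_average(eeg, right_onsets, fs, *window)
    return "left" if sum(left) / len(left) > sum(right) / len(right) else "right"


FS = 250.0  # assumed sample rate in Hz
rng = random.Random(0)
eeg = [rng.gauss(0, 1.0) for _ in range(int(60 * FS))]  # one minute of noise
left_onsets = list(range(25, len(eeg) - 250, 125))   # a sound every 0.5 s
right_onsets = list(range(88, len(eeg) - 250, 125))  # interleaved second stream
for onset in left_onsets:  # simulate a response to the attended (left) stream
    for i in range(onset + 75, onset + 125):  # 0.3 to 0.5 s after each onset
        eeg[i] += 2.0
print(attended_stream(eeg, left_onsets, right_onsets, FS))  # left
```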

To explore this avenue, we built a testing platform in OpenFrameworks (source code below) and are acquiring datasets to see whether we can replicate the performance of Dr. Hill's laboratory technique in the real world using an open-source, OpenBCI-based EEG.

The Digital Futures Exhibit

Because we were working with the high-profile Not Impossible Labs, the Barbican in London invited us to build an art installation for an 18-month traveling exhibition that would also feature Google Creative Lab, Lady Gaga and Chris Milk.

The goal of the installation is to raise awareness of locked-in syndrome while telling a story of technological development. The centerpiece of our installation was designed as a game demonstrating the combination of eyetracking and EEG recordings. The game was designed by master game designers with expertise in exotic hardware: Kaho Abe and Ramsey Nasser.

Title screen for the game in the interactive display

In the game, two players work together to shoot lasers out of their eyes and destroy the bad robots. We used Tobii Rex devices to track the players' eyes and OpenBCI-based EEGs to read the strength of the alpha/beta waves, which controlled the power of the lasers.
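One plausible way to turn band power into a smooth "laser power" control is to normalize a band-power ratio into the range 0 to 1 and low-pass it so the beam doesn't flicker. This is a hypothetical sketch: the `LaserPower` class, the use of an alpha/beta ratio, the 0.5-2.0 ratio range and the smoothing constant are illustrative assumptions, not the game's actual tuning.

```python
class LaserPower:
    """Hypothetical mapping from per-frame band power to a smoothed 0..1 level."""

    def __init__(self, smoothing: float = 0.2, ratio_lo: float = 0.5, ratio_hi: float = 2.0):
        self.smoothing = smoothing  # 0 < smoothing <= 1; higher reacts faster
        self.ratio_lo = ratio_lo    # ratio mapped to 0% power (assumed)
        self.ratio_hi = ratio_hi    # ratio mapped to 100% power (assumed)
        self.level = 0.0

    def update(self, alpha_power: float, beta_power: float) -> float:
        ratio = alpha_power / beta_power if beta_power > 0 else self.ratio_hi
        target = (ratio - self.ratio_lo) / (self.ratio_hi - self.ratio_lo)
        target = min(1.0, max(0.0, target))                   # clamp to 0..1
        self.level += self.smoothing * (target - self.level)  # exponential smoothing
        return self.level


laser = LaserPower()
for _ in range(30):
    power = laser.update(alpha_power=2.5, beta_power=1.0)
print(round(power, 3))  # 0.999
```

The smoothing constant trades responsiveness for stability: a visitor sees the beam ramp up over a second or two instead of jumping with every noisy frame.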

The installation itself was designed by the brilliant Paul Freeth.

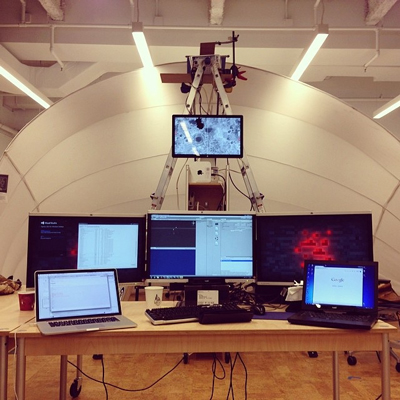

A peek into Kaho's Game Innovation Laboratory, where we tinkered for many evenings to get the game software and control hardware working. This was a big push on the development of the OpenBCI-based EEG system.

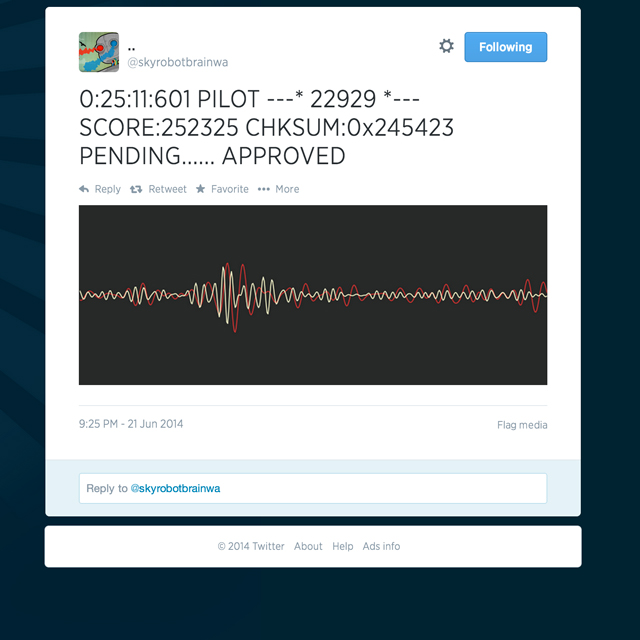

As a bonus, the installation tweets a sample of each user's brainwaves! We tweeted 10,000 brainwaves in the first month of operation at the Barbican Centre in London. This was done with the support of Mel Van Londen.

The press seemed to like what we built :) Although this TechRepublic article was hyperbolic and had several inaccuracies, it's still nice to see people get excited by the direction we're pushing.

The Team

It was a pleasure to work with the people in the photo below. I left the team in March 2015, but they will surely go on to do meaningful, good work. From left to right: Sam Bergen, David Putrino, Nichole Caruso (who worked closely with David to organize an art fundraiser for this project at the Whitespace gallery in spring 2014), myself, Javed Gangjee and Mick Ebeling.

The Source Code

This is coming soon but these are the links I need to post:

Github for the OpenFrameworks OpenBCI module

Github link for the auditory-based experimentation framework

Python Notebook files for post-processing [still working on uploading]